Real-time tracking and stimulus targeting

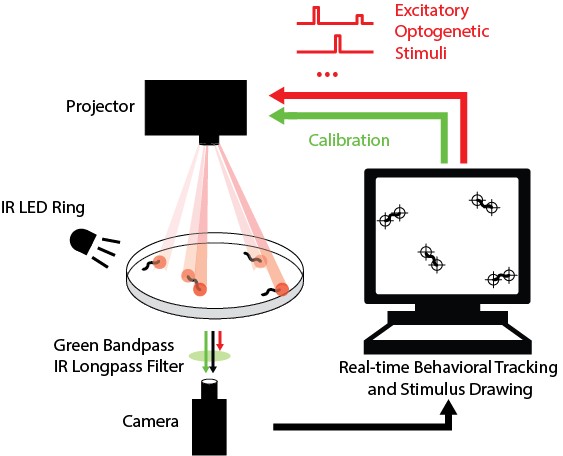

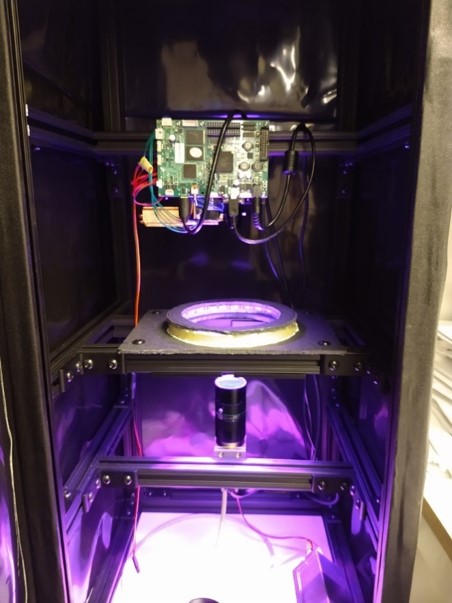

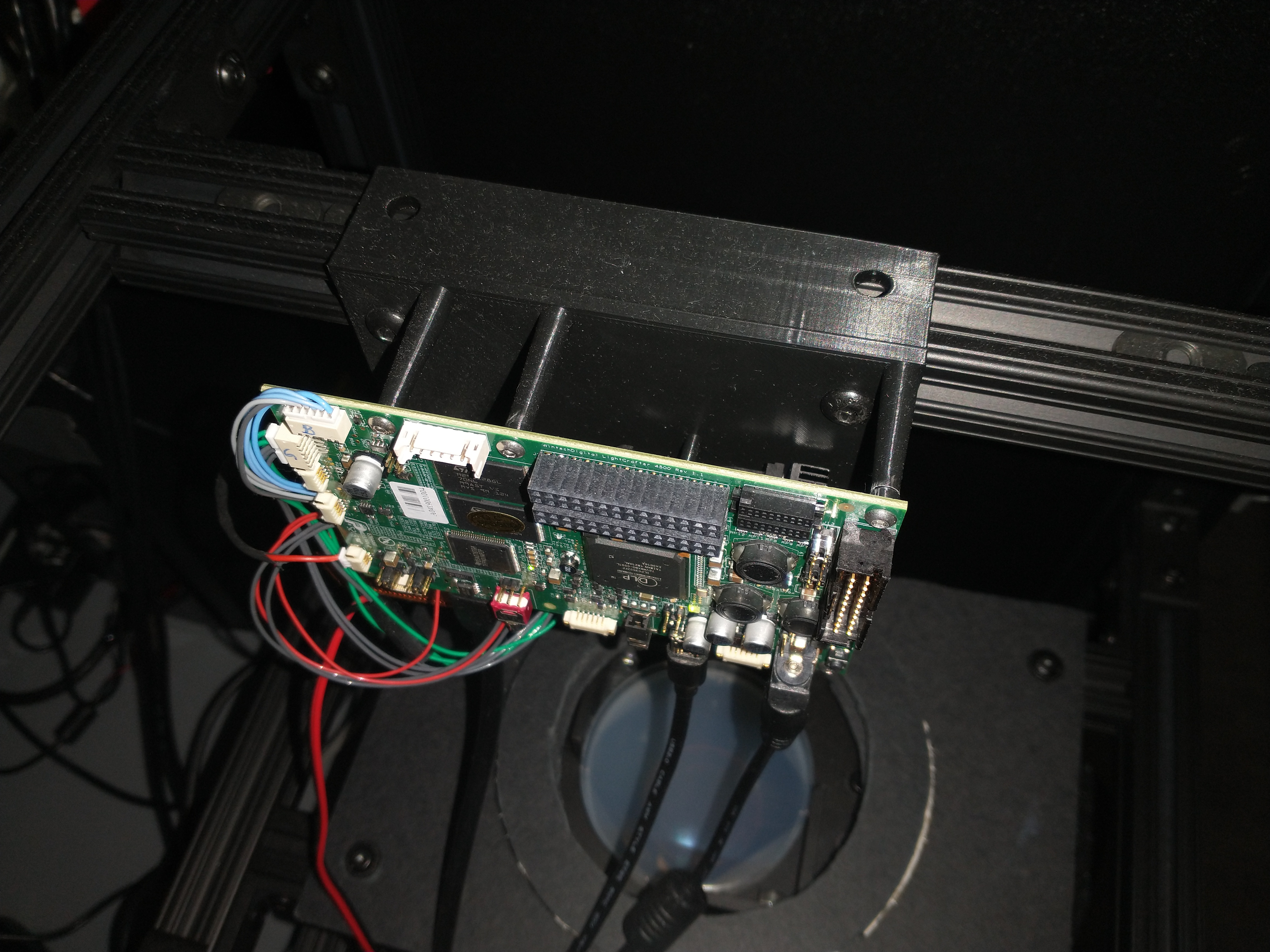

While highly informative to our understanding of worm touch circuitry, my previous work has several limitations that my current research seeks to overcome: (1) the light stimulus was a single color, which prevents multiplexing excitatory and inhibitory stimulation in the same experiment; (2) the stimulus intensity was uniform across the entire plate, so we could not study how touch stimuli to the head and to the tail affect the animal separately; and (3) stimulation was agnostic to the animal’s behavior, which makes it difficult to collect stimulus responses during rare behaviors. To address these limitations, I designed an instrument that probes worm behavioral responses to spatially targeted inhibitory and excitatory optogenetic stimuli, with real-time behavior segmentation and stimulus targeting for many animals in parallel. This work is currently in preparation for publication.

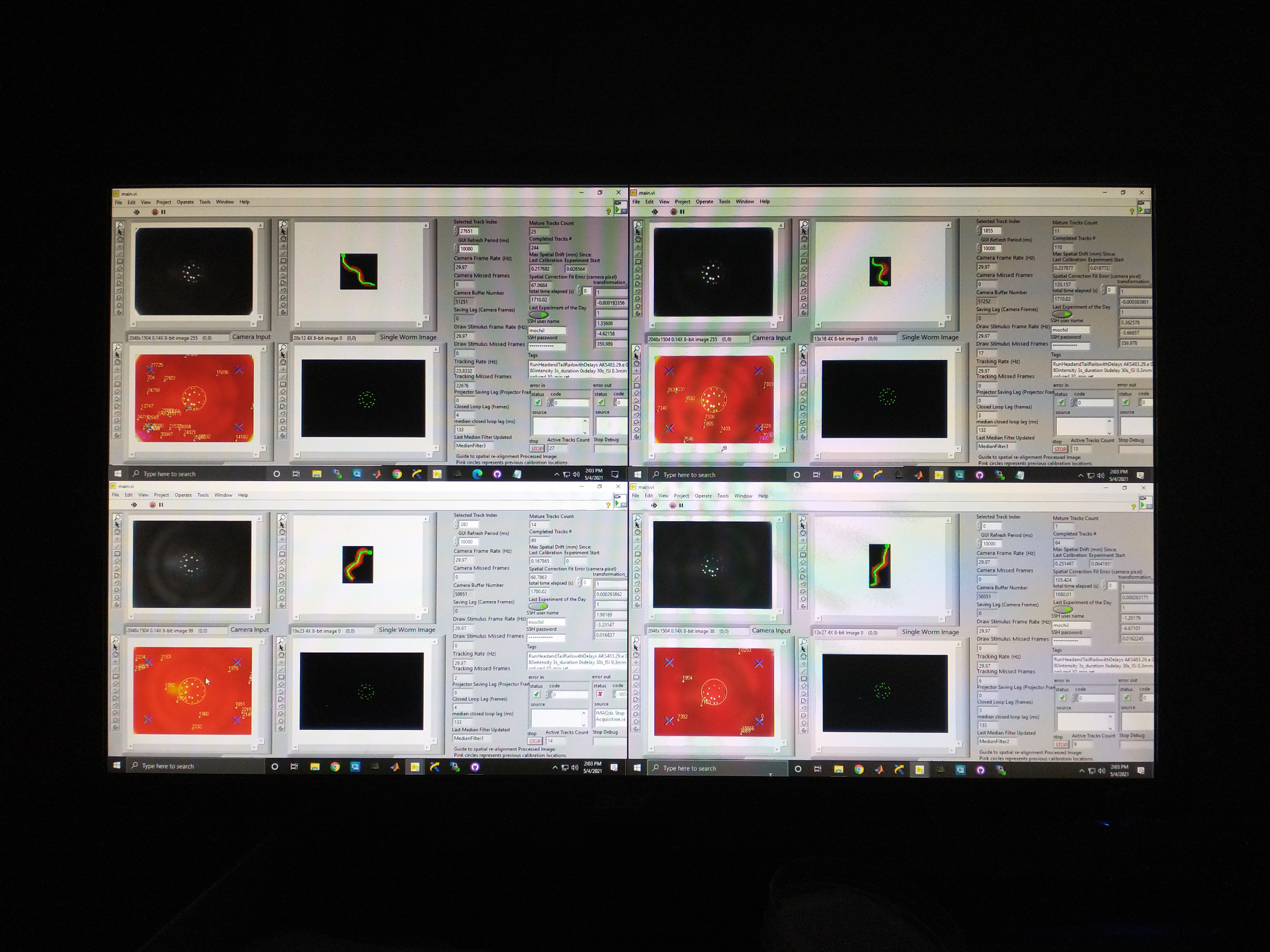

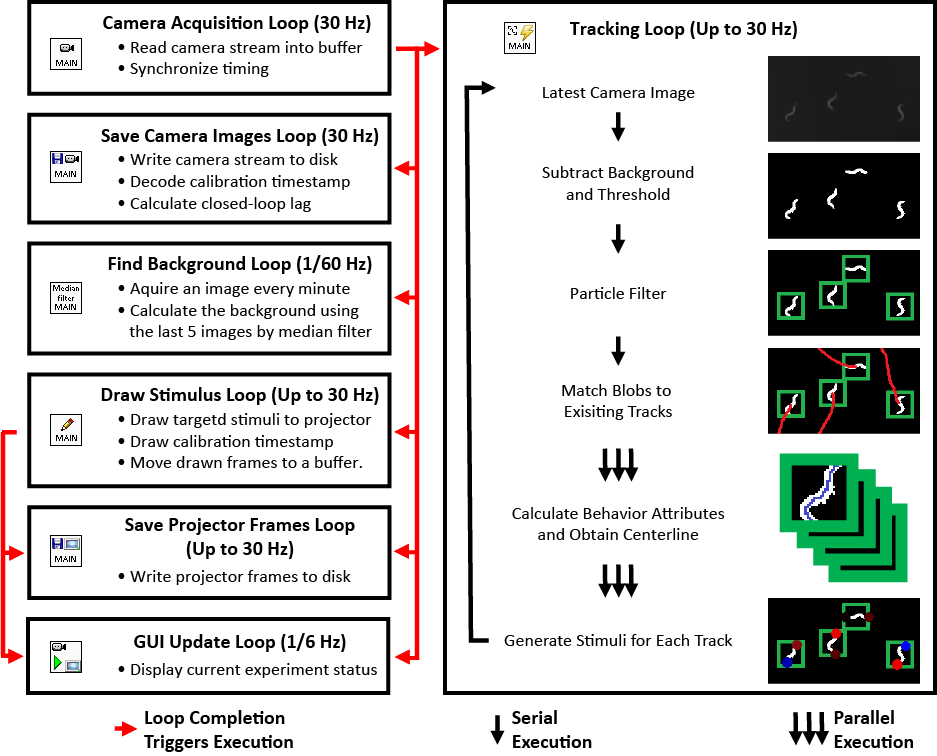

While my previous work also used custom LabVIEW Virtual Instruments (VIs) for stimulation and video capture, the control software here is considerably more sophisticated. It tracks worms, extracts centerlines and other behavioral metrics, and draws spatially and temporally customized stimuli, all in real time at up to 30 Hz (the camera frame rate).
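To make head/tail targeting concrete, here is a minimal Python/NumPy sketch of how a stimulus image could be drawn from a tracked centerline. It is not the LabVIEW implementation. It assumes the centerline is an ordered array of (row, col) points running head to tail, already mapped into projector pixel coordinates (the camera-to-projector calibration is assumed to happen upstream). The function names, the 20% head/tail fractions, and the assignment of one color channel per opsin are hypothetical choices for illustration.

```python
import numpy as np


def segment_mask(centerline, shape, start_frac, end_frac, radius):
    """Boolean image, True within `radius` pixels of the body segment spanning
    [start_frac, end_frac] of body length (0 = head, 1 = tail).

    centerline: (N, 2) array of (row, col) points ordered head -> tail, assumed
    to be already in projector pixel coordinates.
    """
    c = np.asarray(centerline, dtype=float)
    # Normalized cumulative arc length along the centerline: 0 at head, 1 at tail.
    steps = np.linalg.norm(np.diff(c, axis=0), axis=1)
    arc = np.concatenate([[0.0], np.cumsum(steps)])
    arc = arc / arc[-1]
    seg = c[(arc >= start_frac) & (arc <= end_frac)]
    if len(seg) == 0:
        return np.zeros(shape, dtype=bool)
    # Brute-force distance from every pixel to its nearest segment point.
    rows, cols = np.indices(shape)
    pix = np.stack([rows.ravel(), cols.ravel()], axis=1).astype(float)
    dist = np.linalg.norm(pix[:, None, :] - seg[None, :, :], axis=2).min(axis=1)
    return (dist <= radius).reshape(shape)


def draw_stimulus(centerline, shape, radius=3.0, head_frac=0.2, tail_frac=0.2):
    """RGB projector frame: head segment in channel 0, tail segment in channel 2.
    Which channel drives which (excitatory or inhibitory) opsin is hypothetical."""
    img = np.zeros((*shape, 3), dtype=np.uint8)
    img[..., 0] = 255 * segment_mask(centerline, shape, 0.0, head_frac, radius)
    img[..., 2] = 255 * segment_mask(centerline, shape, 1.0 - tail_frac, 1.0, radius)
    return img
```

Measuring segments by fraction of arc length rather than by point index keeps the head and tail regions a fixed proportion of body length even if the centerline points are unevenly spaced. The brute-force distance computation is written for clarity; a real-time version would restrict it to a bounding box around each worm.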

Although the software can run on older machines, it takes advantage of the many cores available on a modern computer to minimize the real-time behavioral feedback lag. The computers we chose use a 32-core processor (AMD Threadripper 3970X), which gives the LabVIEW environment ample parallel computing power and reduces the number of frames dropped during tracking, saving, and stimulus drawing. Each computer also has a total of 6 TB of PCIe Gen4 solid-state storage, which lets us save raw camera and projector images in real time. Saving uncompressed images and deferring compression to post-processing keeps that work out of the real-time loop and reduces latency.
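At 30 Hz, each frame has a budget of 1/30 s ≈ 33 ms. Running tracking, stimulus drawing, and saving as concurrent stages connected by queues means throughput is limited by the slowest stage rather than by the sum of all stages, which is the idea behind spreading the work across many cores. The Python sketch below is only an analogy to parallel LabVIEW loops, not the instrument's code. `track`, `draw`, and `save` are hypothetical placeholder callables, and Python threads run truly in parallel only when those callables release the GIL (as many NumPy and file I/O operations do).

```python
import queue
import threading


def run_pipeline(frames, track, draw, save, maxsize=4):
    """Run track -> draw -> save as three concurrent stages linked by bounded queues.

    Returns the drawn stimuli in frame order. No error handling: if a stage
    raises, the downstream stages will wait forever (acceptable for a sketch).
    """
    q_track = queue.Queue(maxsize=maxsize)  # tracker -> drawer
    q_save = queue.Queue(maxsize=maxsize)   # drawer -> saver
    stop = object()                         # sentinel marking end of stream
    stimuli = []

    def tracker():
        for i, frame in enumerate(frames):
            q_track.put((i, frame, track(frame)))
        q_track.put(stop)

    def drawer():
        while (item := q_track.get()) is not stop:
            i, frame, pose = item
            stim = draw(pose)
            stimuli.append(stim)
            q_save.put((i, frame, stim))
        q_save.put(stop)

    def saver():
        while (item := q_save.get()) is not stop:
            save(*item)  # e.g., write raw camera frame and projector image

    threads = [threading.Thread(target=f) for f in (tracker, drawer, saver)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return stimuli
```

The bounded queues stand in for the finite buffers of a real acquisition system. In this sketch a full queue simply blocks the upstream stage; on a live camera, that is the point where frames would start being dropped if a downstream stage stays behind for too long.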

Using this instrument, we can study not only how the animal integrates head and tail touch stimuli, but also how it processes information during rare behaviors such as turning.
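Targeting rare behaviors requires deciding, frame by frame, when a behavior is under way. As a hypothetical example (this is not the instrument's behavior segmentation, which is not described here), a turn could be flagged when the straight-line head-to-tail distance becomes small relative to the body length along the centerline, with a debounce and a refractory period so that a single turn produces a single stimulus. The `threshold`, `min_frames`, and `refractory_frames` values below are illustrative assumptions, not calibrated parameters.

```python
import numpy as np


def is_turning(centerline, threshold=0.3):
    """Hypothetical turn heuristic: True when the head-to-tail distance is less
    than `threshold` times the body length measured along the centerline."""
    c = np.asarray(centerline, dtype=float)
    body_length = np.linalg.norm(np.diff(c, axis=0), axis=1).sum()
    end_to_end = np.linalg.norm(c[0] - c[-1])
    return end_to_end < threshold * body_length


class TurnTrigger:
    """Closed-loop trigger: fire once a turn has persisted for `min_frames`
    consecutive frames, and at most once every `refractory_frames` frames."""

    def __init__(self, threshold=0.3, min_frames=3, refractory_frames=90):
        self.threshold = threshold
        self.min_frames = min_frames                # debounce against tracking noise
        self.refractory_frames = refractory_frames  # 90 frames = 3 s at 30 Hz
        self._run = 0       # consecutive frames classified as turning
        self._cooldown = 0  # frames left before another stimulus is allowed

    def update(self, centerline):
        """Call once per camera frame; returns True when a stimulus should fire."""
        if self._cooldown > 0:
            self._cooldown -= 1
        if is_turning(centerline, self.threshold):
            self._run += 1
        else:
            self._run = 0
        if self._run >= self.min_frames and self._cooldown == 0:
            self._cooldown = self.refractory_frames
            return True
        return False
```

Note that a turn lasting longer than the refractory period will trigger again; whether that is desirable depends on the experiment.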

Finally, to further increase throughput, we scaled from a single prototype instrument to four identical instruments.